AMD challenges Nvidia with MI300 AI chips

09 Dec 2023

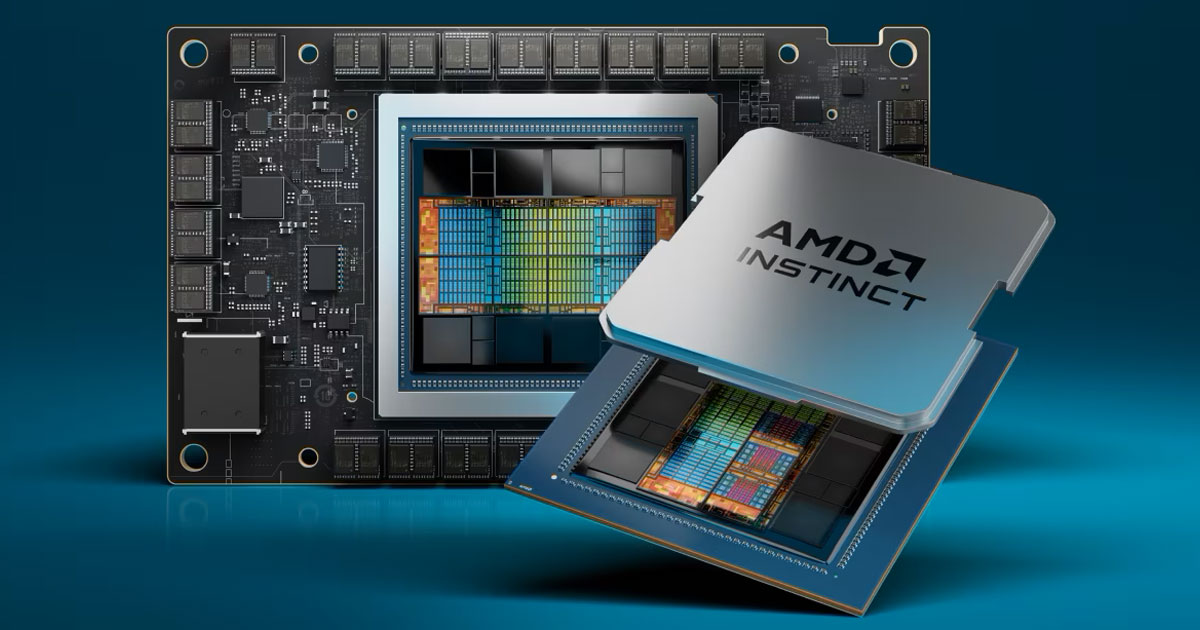

AMD on Wednesday launched the AMD Instinct MI300 series of data center AI accelerators. It also introduced the ROCm 6 open software stack, which brings significant optimisations and new features for large language models (LLMs), and Ryzen 8040 series processors with Ryzen AI.

AMD announced the launch at its 'Advancing AI' event. Representatives from Microsoft, Meta, Oracle, Dell Technologies, HPE, Lenovo, Supermicro, Arista, Broadcom and Cisco attended to showcase how their companies are working with AMD to deliver advanced AI solutions spanning cloud, enterprise and PCs.

The MI300 chips, which are available in systems now, offer more memory than Nvidia's H100 (192 GB of HBM3 on the MI300X versus 80 GB on the H100) and, according to AMD, better AI inference performance. With industry leaders already backing them, the chips pose a serious challenge to Nvidia's dominance in AI computing.

“AI is the future of computing and AMD is uniquely positioned to power the end-to-end infrastructure that will define this AI era, from massive cloud installations to enterprise clusters and AI-enabled intelligent embedded devices and PCs,” said AMD chair and CEO Lisa Su.

“We are seeing very strong demand for our new Instinct MI300 GPUs, which are the highest-performance accelerators in the world for generative AI. We are also building significant momentum for our data center AI solutions with the largest cloud companies, the industry’s top server providers, and the most innovative AI startups, who we are working closely with to rapidly bring Instinct MI300 solutions to market that will dramatically accelerate the pace of innovation across the entire AI ecosystem,” she added.

Microsoft said it is deploying AMD Instinct MI300X accelerators to power the new Azure ND MI300X v5 virtual machine (VM) series, optimised for AI workloads.

Meta said it is adding AMD Instinct MI300X accelerators to its data centers, in combination with ROCm 6, to power AI inference workloads. The company also recognised the optimisations AMD has made in ROCm 6 for the Llama 2 family of models.

ROCm 6, the latest version of AMD’s open-source software stack, has been optimised for generative AI, particularly large language models.

Oracle said it plans to offer OCI bare metal compute solutions featuring AMD Instinct MI300X accelerators and to include the accelerators in its upcoming generative AI service.

Dell announced that it is integrating AMD Instinct MI300X accelerators into its PowerEdge XE9680 server, which it says will deliver groundbreaking performance for generative AI workloads in a modular, scalable format.

HPE announced plans to bring AMD Instinct MI300 accelerators to its enterprise and HPC offerings.

Lenovo shared plans to bring AMD Instinct MI300X accelerators to the Lenovo ThinkSystem platform to deliver AI solutions across industries including retail, manufacturing, financial services and healthcare.

Supermicro announced plans to offer AMD Instinct MI300 GPUs across its AI solutions portfolio. Asus, Gigabyte, Ingrasys, Inventec, QCT, Wistron and Wiwynn also announced plans to offer solutions powered by AMD Instinct MI300 accelerators.

Specialized AI cloud providers including Aligned, Arkon Energy, Cirrascale, Crusoe, Denvr Dataworks and Tensorwaves all plan to provide offerings that will expand access to AMD Instinct MI300X GPUs for developers and AI startups.

OpenAI is adding support for AMD Instinct accelerators to Triton 3.0, its open-source GPU programming language and compiler. This gives developers out-of-the-box support for AMD accelerators and lets them work at a higher level of abstraction on AMD hardware.